Prompt Engineering for Large Language Models: Tips & Tricks

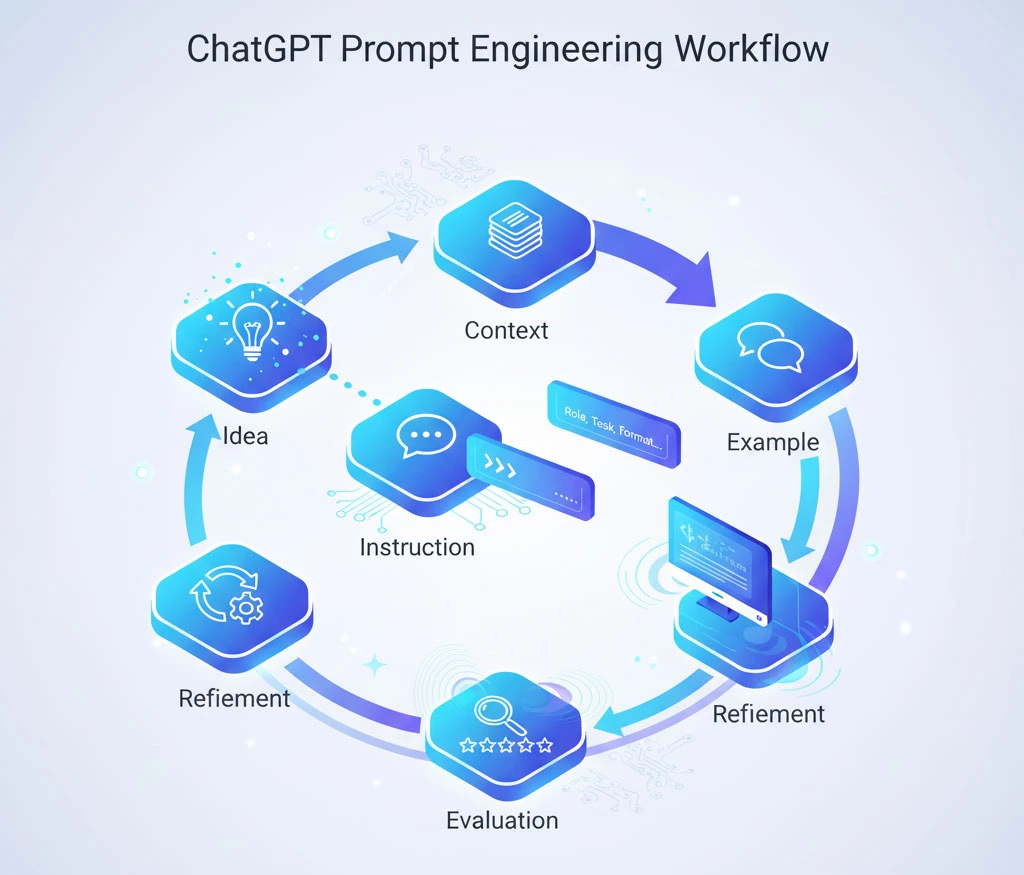

Large language models have revolutionized how we interact with artificial intelligence, but getting the best results requires more than just typing a question. Prompt engineering, the art and science of crafting effective instructions for AI models, can make the difference between mediocre outputs and truly impressive results.

Whether you’re working with ChatGPT, Claude, or other LLMs, mastering prompt engineering techniques will help you unlock their full potential. This guide covers practical strategies that developers, content creators, and business professionals can use to improve their AI interactions immediately.

From basic instruction writing to advanced methods like chain-of-thought prompting, you’ll discover how to communicate more effectively with AI systems and achieve consistent, high-quality results.

Basic Prompt Engineering for LLMs

Write Clear and Specific Instructions

The foundation of effective prompt engineering lies in clarity. Vague prompts often produce vague results, while specific instructions guide the model toward your desired outcome.

Instead of asking “Write about marketing,” try “Write a 300-word blog introduction about email marketing best practices for small business owners.” This approach provides the model with clear parameters: topic, length, format, and target audience.

When crafting instructions, include details about:

- Format: Specify if you want bullet points, paragraphs, or structured lists

- Tone: Request formal, casual, or conversational language

- Length: Provide word counts or approximate lengths

- Audience: Define who will read or use the output
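The four details above can be assembled programmatically so that no prompt goes out missing one of them. The following is a minimal sketch; the function name `build_prompt` and its parameters are illustrative assumptions, not part of any library.

```python
def build_prompt(task: str, fmt: str, tone: str, length: str, audience: str) -> str:
    """Combine a task with explicit format, tone, length, and audience details."""
    details = {
        "Format": fmt,
        "Tone": tone,
        "Length": length,
        "Audience": audience,
    }
    # Task first, then a blank line, then one labeled line per detail.
    lines = [task.strip(), ""]
    lines += [f"- {label}: {value}" for label, value in details.items()]
    return "\n".join(lines)


prompt = build_prompt(
    task="Write a blog introduction about email marketing best practices.",
    fmt="two short paragraphs",
    tone="conversational",
    length="about 300 words",
    audience="small business owners",
)
print(prompt)
```

Making every argument required is a deliberate choice here: a missing tone or audience fails loudly at call time instead of silently producing a vague prompt.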

Use Delimiters for Complex Prompts

Delimiters help organize complex prompts and prevent confusion when dealing with multiple sections or examples. Common delimiters include triple quotes ("""), angle brackets (<>), and XML-style tags.

For example:

Analyze the customer feedback enclosed in triple quotes and provide insights:

"""The product arrived quickly, but the packaging was damaged. The item itself works fine, though the setup instructions were confusing. Overall, I’m satisfied but expected a better presentation."""

Please categorize the feedback into: shipping, packaging, product quality, and documentation.

This structure makes it clear where your input ends and the task begins.
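When prompts are built in code, the same separation can be applied automatically. The sketch below uses XML-style tags, one of the delimiter options mentioned earlier; the helper name `wrap_in_tags` and the default tag name `feedback` are assumptions made for illustration.

```python
def wrap_in_tags(instruction: str, content: str, task: str, tag: str = "feedback") -> str:
    """Separate free-form input from the instructions with XML-style tags."""
    opening, closing = f"<{tag}>", f"</{tag}>"
    # If the input already contains the closing tag, the boundary would be
    # ambiguous, so refuse rather than guess.
    if closing in content:
        raise ValueError(f"content already contains {closing}")
    return "\n\n".join([instruction, f"{opening}\n{content.strip()}\n{closing}", task])


feedback = "The product arrived quickly, but the packaging was damaged."
prompt = wrap_in_tags(
    instruction="Analyze this customer feedback and provide insights:",
    content=feedback,
    task="Please categorize the feedback into: shipping, packaging, product quality, and documentation.",
)
print(prompt)
```

The check for an embedded closing tag matters most when the wrapped text comes from users, since stray delimiters in the input could otherwise blur where the data ends and the instructions resume.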

Provide Relevant Context

Context helps models understand the situation and adjust their responses accordingly. When seeking business advice, mention your industry and company size. For technical questions, specify your experience level and the tools you’re using.

Effective context includes:

- Background information: Relevant details about your situation

- Constraints: Budget limits, time restrictions, or technical requirements

- Goals: What you’re trying to achieve with the output

Advanced Prompt Engineering for ChatGPT & LLMs

Few-Shot Learning

Few-shot learning involves providing examples of the desired output format before asking for new content. This technique helps models understand patterns and maintain consistency across responses.

Here’s a few-shot prompt for product descriptions:

Write product descriptions following these examples:

Product: Wireless Headphones

Description: Experience crystal-clear audio with our premium wireless headphones. Featuring 30-hour battery life and noise cancellation technology.

Product: Smartphone Case

Description: Protect your device with our durable smartphone case. Military-grade materials provide maximum protection without adding bulk.

Product: Coffee Maker

Description: [Generate description here]

This approach works particularly well for maintaining consistent tone, structure, or formatting across multiple outputs.
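A few-shot prompt like this one is easy to generate from a list of examples, which keeps the layout identical every time. The following is a rough sketch; `few_shot_prompt` is a hypothetical helper, and the `Product:` / `Description:` labels simply mirror the example above.

```python
from typing import Sequence, Tuple


def few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    new_product: str,
) -> str:
    """Build a few-shot prompt: instruction, worked examples, then an open slot."""
    blocks = [instruction]
    for product, description in examples:
        blocks.append(f"Product: {product}\nDescription: {description}")
    # Leave the final description empty so the model completes the pattern.
    blocks.append(f"Product: {new_product}\nDescription:")
    return "\n\n".join(blocks)


examples = [
    ("Wireless Headphones",
     "Experience crystal-clear audio with our premium wireless headphones."),
    ("Smartphone Case",
     "Protect your device with our durable smartphone case."),
]
prompt = few_shot_prompt(
    "Write product descriptions following these examples:",
    examples,
    "Coffee Maker",
)
print(prompt)
```

Ending the prompt on an empty `Description:` line, rather than a bracketed placeholder, is one common way to signal where the model should continue; either form can work.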

Chain-of-Thought Prompting

Chain-of-thought prompting encourages models to break down complex problems into logical steps. This technique often improves accuracy on reasoning tasks and puts the model’s intermediate steps on the page, where you can check them.

Instead of asking “What’s the ROI of this marketing campaign?” try:

Calculate the ROI for this marketing campaign using step-by-step reasoning:

Campaign cost: $5,000

Revenue generated: $15,000

Additional metrics to consider: customer acquisition cost, lifetime value

Please show your work and explain each calculation step.

This approach helps ensure accurate calculations and allows you to verify the logic behind the results.
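To verify the model’s steps, it helps to have the expected arithmetic on hand. The sketch below assumes the common simple ROI definition, net profit divided by cost; the prompt itself does not specify a formula, so treat this as one reasonable reading rather than the only one.

```python
def simple_roi(cost: float, revenue: float) -> float:
    """Return ROI as a fraction, assuming ROI = (revenue - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    net_profit = revenue - cost
    return net_profit / cost


cost, revenue = 5_000, 15_000
roi = simple_roi(cost, revenue)
print(f"Net profit: ${revenue - cost:,}")  # Net profit: $10,000
print(f"ROI: {roi:.0%}")                   # ROI: 200%
```

If the model’s step-by-step answer reports a different figure, the intermediate steps show whether it used a different formula or made an arithmetic slip.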

Self-Consistency Methods

Self-consistency involves sampling several independent responses to the same prompt, then keeping the answer that appears most often or identifying the themes the responses share. It is most useful for reasoning tasks with a checkable answer; for complex decisions or creative tasks, comparing several candidates serves a similar purpose.

You might prompt: “Generate three different approaches to solving this customer service issue, then recommend which approach would be most effective and explain why.”

This method helps validate responses and provides multiple perspectives on challenging problems.
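For questions with a short final answer, the comparison can be automated with a simple majority vote. In the sketch below, `sample` is a hypothetical callable standing in for one model call (typically made with some randomness enabled so that responses differ); canned answers are used here so the example runs without any API.

```python
from collections import Counter
from typing import Callable, Tuple


def self_consistent_answer(sample: Callable[[], str], n: int = 5) -> Tuple[str, float]:
    """Collect n sampled answers and return the most common one with its vote share."""
    # Strip whitespace so trivially different strings count as the same answer.
    answers = [sample().strip() for _ in range(n)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n


# Stand-in for repeated model calls: each call returns the next canned answer.
canned = iter(["200%", "200%", "150%", "200% ", "300%"])
answer, agreement = self_consistent_answer(lambda: next(canned), n=5)
print(answer, agreement)  # 200% 0.6
```

A low agreement score is a useful signal in its own right: it suggests the prompt is ambiguous or the task is hard enough to deserve a human check.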

Tools & Frameworks for ChatGPT Prompt Engineering

Several platforms can enhance your prompt engineering workflow and help you achieve better results consistently.

Prompt Libraries and Templates

- PromptBase: A marketplace for buying and selling prompts across different AI models

- OpenAI Cookbook: Free examples and guides for GPT prompting techniques

- Anthropic’s Claude documentation: Comprehensive guides for prompt engineering with Claude

Testing and Optimization Platforms

- PromptPerfect: Tools for testing and optimizing prompts across multiple LLMs

- Weights & Biases: Experiment tracking for prompt engineering projects

- LangSmith: Debugging and testing platform for language model applications

AI Consulting for LLM optimization can provide customized solutions for businesses looking to implement advanced prompting strategies at scale. Professional services often include prompt template development, model fine-tuning guidance, and integration support.

Development Frameworks

- LangChain: Python framework for building LLM applications with advanced prompting capabilities

- Semantic Kernel: Microsoft’s SDK for integrating AI services with conventional programming languages

- Haystack: End-to-end framework for building search systems and question-answering applications

These tools become particularly valuable when rolling out generative AI development across multiple business functions or when scaling prompt engineering efforts across teams.

Frequently Asked Questions

How do I write better ChatGPT prompts?

Write clear, specific, and contextual prompts. Define the tone, length, and audience, and use examples or step-by-step reasoning to guide ChatGPT toward accurate results.

What are examples of ChatGPT prompt improvements?

A strong ChatGPT prompt clearly defines the objective and structure. For example:

- Weak: “Write about AI.”

- Improved: “Write a 400-word blog post explaining how small businesses can use AI chatbots to improve customer service, using a friendly and informative tone.”

What is prompt engineering in large language models?

Prompt engineering is the practice of designing and optimizing text inputs to guide large language models toward producing desired outputs. It involves crafting clear instructions, providing appropriate context, and using specific techniques to improve response quality and consistency. Effective prompt engineering can dramatically improve AI model performance without requiring technical modifications to the underlying system.

How can AI Consulting improve prompt engineering results?

AI consulting services provide expertise in advanced prompting strategies, model selection, and integration best practices. Consultants can help businesses develop standardized prompt templates, implement quality control processes, and optimize workflows for specific use cases. They also offer training on ChatGPT prompt writing and other platform-specific techniques that help maximize return on AI investments.

What tools are available for optimizing LLM prompts?

Several categories of tools support prompt optimization: testing platforms like PromptPerfect allow comparison across different models, development frameworks like LangChain enable programmatic prompt management, and documentation resources provide proven templates and techniques. Some tools also offer analytics features that track prompt performance over time.

Master Your AI Interactions

Effective prompt engineering transforms large language models from impressive tech demos into practical business tools. The techniques covered here, from basic instruction writing to advanced methods like chain-of-thought prompting, provide a foundation for getting consistently better results from AI systems.

Start by implementing clear, specific instructions in your current AI workflows. Experiment with few-shot examples for tasks requiring consistent formatting. For complex problems, try breaking them down using chain-of-thought approaches.

Ready to take your AI implementation to the next level? Consider exploring best practices for AI model prompts through hands-on experimentation with different techniques. The investment in learning proper prompt engineering pays dividends in improved efficiency and output quality across all your AI-powered projects.